Structural changes are often made in the expectation that they will improve how the relevant part of government functions.

The effects of the structural change are often part of, and hard to distinguish from, the wider change process to support that improvement.

-

Start early

The need for evaluation should be acknowledged in the early stages of the decision process, and evaluation planning should begin during the due diligence phase, or as soon as the final decision is made. The evaluation itself should begin during implementation of the change so that baseline data can be gathered and emerging issues addressed.

-

Establish roles and resources

Engage stakeholders

Stakeholders can include ministers, leadership and staff in the agencies under review, and external stakeholders who are affected by the change.

Establish decision-making processes

Determine who is commissioning the evaluation, and who or what group will make decisions about it, including final sign-off.

Determine resource requirements

Establish what resources (time, money and expertise) are needed, and ensure availability.

Decide who will do the evaluation

The evaluation can be done by the organisation that is undergoing the change, by an external agency or consultant, or by a hybrid internal/external group. Consider the following.

- Capacity and capability: do evaluation skills and capacity exist in-house or externally? Is that capability likely to be retained during and after the change?

- Sensitivity: will staff and stakeholders be more open and honest with someone from inside or from outside of the agency?

- Bias: will the people who will be managing or doing the evaluation be heavily affected by the structural change, to the point where it might affect their work?

- Learning: will lessons from the evaluation be more readily used if the evaluation is done in-house or externally?

Even when the evaluation is conducted externally, it should draw on any internal evaluation expertise in the agency undergoing the change. Internal evaluators can alert you to important considerations, and can advise on planning and setting up the evaluation.

-

Define what is to be evaluated and why

Evaluation purpose

The evaluation purpose should describe how the evaluation findings will be used. Evaluations often have one or more of the following purposes:

- to inform decision-making aimed at improvement

- to provide lessons for the wider sector

- to inform decision-making on continuation of the structural change.

For example, the review of Central Agencies Shared Services (Example 1) aimed to improve decision-making and to provide lessons for the wider sector.

Describe the change

Develop an initial description of the structural change that is to be evaluated, including what is changing, the needs it addresses, and the involved parties. It should be straightforward to gather this information from the machinery of government review documents, including Cabinet papers, the regulatory impact statement, the business case and the due diligence.

Use an intervention logic

You should develop an intervention logic diagram of the inputs, activities, outputs and intended outcomes. The documents for the decision process (such as Cabinet papers, business cases and regulatory impact assessments) should articulate the intervention logic, but you may need to extract the information and develop it into a diagram. You can start to develop it by filling in a table like this.

Inputs

What you invest, for example:

- costs of changes in staffing, accommodation, IT, branding, and corporate functions

- indirect costs such as time spent planning, and productivity losses during the change.

Activities

What you did to plan and implement the change, for example:

- initial review

- developing options

- due diligence

- implementing the change.

Outputs

What you produce, for example:

- transfer of functions

- new agency

- disestablished agency

- merged agency.

Outcomes (short-medium term)

Change expected to be achieved in 1–4 years, for example:

- improved efficiency

- greater specialisation or focus

- more effective service delivery, including for Māori or other under-served groups

- better coordination or integration of services and/or policy

- improved clarity of roles and responsibilities

- better governance

- improved responsiveness to government priorities and Te Tiriti o Waitangi | the Treaty of Waitangi

- better information flows

- better resilience/future-proofing

- improved risk management.

Outcomes (long term)

- The national or community-level changes expected in 5+ years, related to the agency or group’s mission.
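If you prefer to hold the table in a structured form, for example to check that every row has been filled in before drawing the diagram, it could be captured along the following lines. This is a minimal sketch only: the class name `InterventionLogic`, its field names, and the sample entries are illustrative assumptions, not part of any prescribed template.

```python
from dataclasses import dataclass, field


@dataclass
class InterventionLogic:
    """One list per row of the intervention logic table (hypothetical layout)."""
    inputs: list[str] = field(default_factory=list)
    activities: list[str] = field(default_factory=list)
    outputs: list[str] = field(default_factory=list)
    short_medium_outcomes: list[str] = field(default_factory=list)
    long_term_outcomes: list[str] = field(default_factory=list)

    def missing_stages(self) -> list[str]:
        """Return the names of rows that have no entries yet."""
        return [name for name, items in vars(self).items() if not items]


# Illustrative entries drawn from the examples in the table above.
logic = InterventionLogic(
    inputs=["costs of changes in staffing and IT"],
    activities=["due diligence", "implementing the change"],
    outputs=["merged agency"],
    short_medium_outcomes=["improved efficiency"],
)

print(logic.missing_stages())
```

Rows reported as missing are a prompt to go back to the decision documents or to stakeholders, rather than a sign that the logic is wrong.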

An intervention logic illustrates the rationale behind an initiative, but it does not provide enough detail on its own. You should also develop a description of how actions are intended to produce results, the underlying assumptions, risks, and the external factors that influence achievement of outcomes.

The intervention logic covers intended outcomes. You should supplement it by working with stakeholders to identify possible unintended results (positive and negative). This work should be regularly updated as the effects of the changes become apparent.

Find an example of an intervention logic map on page 13 of Impact statement: State Sector Act reform.

-

Frame the boundaries of the evaluation

Key evaluation questions

The evaluation may focus on implementation or effectiveness, or both. It can take years for all of the outcomes from structural changes to become apparent, and costs often outweigh benefits in the short term. You may therefore want to focus on implementation and early outcomes during and immediately after the structural change, while setting up to track longer term outcomes over time.

Evaluation focus

Possible evaluation questions

Implementation

- Have the changes been implemented well?

- How could the change process be improved?

- What things have helped and hindered successful implementation?

Effectiveness

- How well did the change achieve its objectives: are the desired outcomes being achieved, to what degree, and at what cost?

- Do the benefits of the change outweigh the costs?

- Are there unanticipated outcomes (positive or negative), such as impacts on other parts of the agency, or other agencies?

- What aspects of the change have been more or less effective, for whom, and under what circumstances?

- What has helped and hindered the effectiveness of the change?

- What could be done to improve effectiveness or value for money?

- What have the outcomes been for the public/service users? Who has benefited or lost out?

- What is the public perception of the change?

Criteria for measuring success

For each evaluation question, you need to determine what achievements will demonstrate success.

For example, if one of the objectives of the change is to deliver better services, determine:

- what services you will look at

- how you will judge if they are better (for example, by measuring client satisfaction, timeliness, other aspects, or a combination of measures)

- what level of improvement constitutes ‘better’ (for example, is it good when the speed of service provision improves by 5% or is a bigger improvement desired? Or should the speed stay the same while other factors improve?).
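One way to make such criteria explicit is to record, for each measure, the baseline, the direction that counts as improvement, and the minimum change that counts as success. The sketch below illustrates this under stated assumptions: the class name `Criterion`, the measures, the baselines and the thresholds are all hypothetical, and real criteria would come from stakeholders and the rationale for the change.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    measure: str
    baseline: float
    target_change_pct: float       # minimum improvement, as a % of baseline
    higher_is_better: bool = True

    def change_pct(self, observed: float) -> float:
        """Percentage change from baseline, signed so positive means improvement."""
        raw = (observed - self.baseline) / self.baseline * 100
        return raw if self.higher_is_better else -raw

    def is_met(self, observed: float) -> bool:
        return self.change_pct(observed) >= self.target_change_pct


# Hypothetical criteria: speed must improve by at least 5%,
# while satisfaction must at least hold steady.
timeliness = Criterion("days to process an application", baseline=20.0,
                       target_change_pct=5.0, higher_is_better=False)
satisfaction = Criterion("clients satisfied (%)", baseline=80.0,
                         target_change_pct=0.0)

print(timeliness.is_met(18.0), satisfaction.is_met(80.0))
```

Writing the threshold down before data is collected makes it harder to redefine success after the results are known.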

You can develop the criteria by investigating best practices, and/or by working with stakeholders to document what is important to them. Some criteria should have been developed already, as part of the rationale for the change. Other sources of information on best practices in change management and the qualities of high-functioning government agencies include international literature, such as that used in the evaluations of the establishment of the Central Agencies Shared Services (Example 1) and the United Kingdom Ministry of Justice (Example 2).

-

Example 1. Criteria — Review of lessons from the Central Agencies Shared Services, New Zealand

This review of a structural change examined implementation and progress towards outcomes. The review’s purpose was to inform decisions about the change, and to provide lessons for the wider sector. The Central Agencies Shared Services (CASS) was established in 2012 to provide finance, human resources, information technology, and information management services to the Department of the Prime Minister and Cabinet, the Treasury and the then State Services Commission. In 2013, CASS’ performance was reviewed, looking at:

- its achievements and pace of change towards outcomes and benefits

- factors that contributed to the effective implementation of CASS

- risks to the successful future operation of CASS

- governance or structural changes that could influence future success.

The evaluators developed 11 critical success factors using international research.

Figure 1: 11 critical success factors

Reproduced from Ernst & Young (2013)

-

Describe what has happened

What kinds of data to collect

Once you are clear about the evaluation’s questions and success criteria, you can collect information on inputs, activities, outputs, outcomes, and context. There are many possible information sources and analysis methods. You should consider what is feasible with available resources and, where possible, use existing data (for example, from administrative datasets or performance monitoring).

Data does not just mean numbers. Some things are easy to quantify (such as costs and savings from changes in staff numbers and salaries) while others are not (such as staff productivity, or the effectiveness of a new vision). Your evaluation should use several of the following data sources and methods to develop a well-rounded understanding:

- A review of documents such as Cabinet papers, the regulatory impact statement, the business case for the change, implementation phase documentation, any progress review reports (including Gateway assessments or performance improvement framework reports), relevant policies and meeting minutes, examining:

  - what changes occurred and how and when they occurred

  - systems and processes and their alignment with best practice.

- Financial metrics including:

  - the costs and savings from staff, accommodation, IT, and branding changes

  - estimates, or at least identification, of indirect costs such as staff productivity losses.

  The UK National Audit Office (2010) lists types of costs in the methodology for its VFM report Reorganising central government (HC 452 2009-10), page 3.

- Organisational performance metrics, paying careful attention to baseline measures before the change, as well as performance during and after the change.

- Human capital metrics such as planned and unplanned staff turnover.

- Data on contextual factors that could influence implementation or outcomes.

- Interviews with staff, leadership, and external stakeholders, seeking to:

  - clarify or explain the findings from the document review and analysis of metrics

  - gather insights on context, new directions of enquiry and unanticipated outcomes

  - generate new evidence, for example, about things that are difficult to quantify.

Unanticipated outcomes can be difficult to identify in advance. They can include effects on other parts of the agency, or the wider system. They can also include outcomes that are difficult to quantify, such as changes in staff attitudes, or new ways of working.

You should include methods (such as interview questions) that look for unanticipated outcomes. Allow for further data collection to check the evidence for those outcomes.

Data churn

Data churn happens when a data series (such as a financial or performance metric) is discontinued or changed so that new data is no longer collected or is incomparable with the old data. This makes it difficult to track changes over time and can create problems for evaluation of structural changes.

Use these strategies to lower the risk from data churn.

- Keep abreast of proposed changes in metrics and escalate any concerns to leadership.

- Document any changes in data collection that occurred during the evaluation.

- For measures that change, create an overlap period, during which data is measured both ways, so that the second data series can be indexed to the first.

Sensitivities

The evaluation planning should include how and by whom staff and other stakeholders will be engaged and what sensitivities are likely to arise. It is helpful if the organisation has good feedback loops that give an early indication of potential issues. Structural changes create uncertainty and workload, and people can also become personally invested in promoting or resisting the change. People who have recently joined may be embarrassed not to be able to answer questions, and it may not be feasible or appropriate to contact people who have moved on since the process started.
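Returning to data churn, the overlap-period strategy described earlier can be sketched in code. The example below indexes a new data series to an old one using the periods in which both were measured. The function name `splice_series`, the ratio-based link factor, and all figures are illustrative assumptions; a simple ratio suits measures with a meaningful zero, and other measures may need a different linking method.

```python
from statistics import mean


def splice_series(old, new):
    """Rescale `new` onto the scale of `old` using the periods both cover.

    `old` and `new` map a period label to a value. The link factor is the
    mean old value divided by the mean new value over the overlap.
    """
    overlap = sorted(set(old) & set(new))
    if not overlap:
        raise ValueError("the two series need at least one overlapping period")
    factor = mean(old[p] for p in overlap) / mean(new[p] for p in overlap)

    spliced = dict(old)                    # keep old values where they exist
    for period, value in new.items():
        if period not in old:
            spliced[period] = value * factor   # express new-only periods on the old scale
    return spliced, factor


# Hypothetical example: a satisfaction score moves from a 5-point to a 10-point scale,
# with both versions collected in 2022 and 2023.
old_series = {"2021": 3.8, "2022": 4.0, "2023": 4.2}
new_series = {"2022": 8.0, "2023": 8.4, "2024": 8.8}

spliced, factor = splice_series(old_series, new_series)
print(factor, spliced)
```

The longer the overlap period, the less the link factor depends on any one unusual measurement. Record the factor and the method alongside the other documented changes in data collection.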

-

Example 2. Criteria and information gathering — Evaluation of the implementation of change in the Ministry of Justice, United Kingdom

This evaluation asked how well a structural change was implemented. It is a good example of success criteria for implementation quality, and of real-time evaluation to identify emerging issues as the change progresses.

The Ministry of Justice was established in 2007, bringing together agencies responsible for justice issues, and creating one of the United Kingdom’s largest departments. The Institute for Government evaluated the change, with an emphasis on gathering information in ‘real-time’ on how it was progressing.

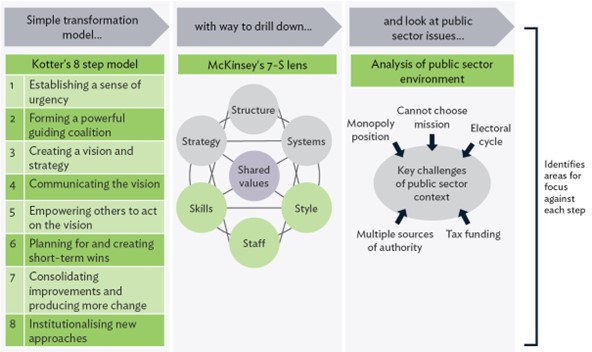

In framing the boundaries of the evaluation the Institute developed success criteria drawing on literature on change management, organisational effectiveness and public sector management.

The Institute combined Kotter’s ‘8-steps’ model (which describes steps in successful transformation) with McKinsey’s 7-S model (to identify the types of demands on the ministry at each step) and with an analysis of differences between the public and private sectors.

Figure 2: Success criteria

Reproduced from McCrae, Page, & McClory (2011)

In describing what had happened, the evaluation used information from:

- interviews and a survey with senior leadership, asking about progress at the 8 steps

- analysis of documentation, looking at how the overall vision and strategy developed

- observation of key meetings and events

- existing survey data and questions that they added to the ministry’s internal surveys.

Multiple data sources were used to assess progress, and data from different sources were triangulated to ensure robust conclusions and to gain additional insights from comparing discrepancies between sources.

The published report of the evaluation findings describes the ministry’s progress at each of the 8 steps, presenting key findings and recommendations for the ministry’s future.

Evaluation report: McCrae et al. (2011a)

Evaluation methodology: Gash, McCrae, & McClory (2011)

-

Understand attribution of outcomes

When analysing outcomes from the structural change, you need to look for evidence that the outcomes were caused by the structural change. Did the structural change contribute to the outcomes, or would they have happened anyway?

Some approaches to attribution use comparison groups, but this is almost never possible for government structural changes. Instead, you should look at whether what you observe is consistent with what you would expect if the structural change contributed to outcomes.

- Look at timing and intermediate steps. For example, if you see improved client satisfaction, did it occur after a service change that could have influenced satisfaction?

- Investigate alternative explanations. For example, is the outcome better explained by technological advances, or perhaps by other public sector or political changes?

- Explore staff and stakeholder perceptions about the extent to which the structural change or other factors contributed to outcomes.

As an example, imagine that two agencies have been merged with the goal of better integrating health services. Population health indicators show improvement, but did the merger contribute to that? You could investigate several lines of evidence.

- In what direction were the health indicators trending before the merger? Was there improvement over and above historical trends?

- Do the health improvements fit the changed services that were put in place? For example, a reduction in rheumatic fever after introduction of initiatives targeting rheumatic fever would support attribution, but a reduction in head injuries would not.

- Does the timing fit? Most outcomes take time to be achieved; was there a reasonable interval between the merger, the establishment of services, and the improved health? If there was no delay, or outcomes preceded activities, the claim of attribution is suspect.

- Compare outcomes between service recipients and non-recipients (the comparison group approach). If the improvements were greater for service recipients than for a similar group of non-recipients, it would support attribution to the service.

- Ask clients about attribution. What effects do they believe the service had on their health or their health-related behaviours? What else influenced their health or behaviour?

- Ask staff about the effect of the merger. Do they think that the integrated service would have been possible, or as effective under the old (non-merged) structure?

- Examine alternative explanations. What else may have affected the health indicators? Other agencies’ initiatives? A mild winter? How credible is the evidence for those explanations versus attribution to the structural change?

- Overall, considering all of these factors, how sure can we be that the merger led to integrated services that contributed to better health?
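The first line of evidence above, improvement over and above historical trends, can be checked with a simple calculation. The sketch below fits a straight line to pre-merger values, projects it forward, and reports how far each post-merger observation departs from that projection. The function names and the indicator values are hypothetical, and a linear trend is itself an assumption that may not suit every indicator.

```python
def fit_trend(years, values):
    """Ordinary least-squares straight line through (year, value) points."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def gap_from_trend(pre, post):
    """Observed value minus the projected pre-change trend, per post-change year."""
    slope, intercept = fit_trend(list(pre), list(pre.values()))
    return {year: observed - (slope * year + intercept)
            for year, observed in post.items()}


# Hypothetical indicator: cases per 100,000 people (lower is better),
# with the merger taking effect at the end of 2019.
pre_merger = {2015: 50.0, 2016: 48.0, 2017: 46.0, 2018: 44.0, 2019: 42.0}
post_merger = {2020: 40.0, 2021: 36.0, 2022: 32.0}

gaps = gap_from_trend(pre_merger, post_merger)
print(gaps)
```

A gap that widens over time is consistent with a contribution from the change, but it does not establish one. It still needs to be weighed against the timing, fit and alternative explanations listed above.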

Contribution analysis is a specific method you could use; it addresses these issues using a more formalised framework. See Better Evaluation (no date), and Mayne (2008) in the further resources section.

-

Synthesise findings

Your synthesis should be shaped by the evaluation purpose, collating and interpreting findings so that decisions can be made. Synthesis should involve:

- assessment of effectiveness or implementation against the success criteria

- assessment of value for money, cost benefit, or cost effectiveness (this could use tools such as CBAx, from The Treasury)

- describing what has worked and why, and what can be improved

- generalisation of findings into lessons for the wider sector.
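For the value for money assessment, a basic discounted comparison of costs and benefits can show both the overall result and how long the change takes to pay back. The sketch below is not CBAx and does not reproduce its method. The function names, the yearly figures and the 5% discount rate are hypothetical assumptions chosen only to illustrate the arithmetic.

```python
def discounted_net_by_year(costs, benefits, discount_rate):
    """Benefit minus cost for each year, discounted back to year 0."""
    return [(benefit - cost) / (1 + discount_rate) ** year
            for year, (cost, benefit) in enumerate(zip(costs, benefits))]


def net_present_value(costs, benefits, discount_rate):
    return sum(discounted_net_by_year(costs, benefits, discount_rate))


def break_even_year(costs, benefits, discount_rate):
    """First year in which cumulative discounted net benefit is non-negative, else None."""
    total = 0.0
    for year, net in enumerate(discounted_net_by_year(costs, benefits, discount_rate)):
        total += net
        if total >= 0:
            return year
    return None


# Hypothetical figures in $ million; year 0 is the year of the change.
costs = [10.0, 4.0, 1.0, 1.0, 1.0]
benefits = [0.0, 3.0, 5.0, 6.0, 6.0]
rate = 0.05   # illustrative discount rate only

npv = net_present_value(costs, benefits, rate)
payback = break_even_year(costs, benefits, rate)
print(round(npv, 2), payback)
```

In this illustration costs exceed benefits for the first few years, which is the short-term pattern often seen with structural changes, so the year at which the evaluation is done strongly affects the result.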

-

Report on and support the use of findings

We strongly encourage publication of the evaluation findings as a way to propagate lessons across the public sector, supporting the development of a better, stronger system.